As assessment has matured, many programs and units have spent years developing mission statements, goals, outcomes, and measures. Surprisingly, one part of the process continues to be challenging: setting targets.

An achievement target answers the questions, “What are you looking for? What would success look like?” It is the result, target, benchmark, or value that will represent success at achieving a given outcome. Sounds simple, right?

However, setting targets can be fraught with complexities. The right target can depend on the discipline, the type of measure, and the timing in a cycle, and the process is often clouded by misconceptions about consequences for unmet targets.

Dr. Sara J. Finney, Professor in the Department of Graduate Psychology at James Madison University, delves into this in her webinar, “Using Implementation Fidelity Data to Evaluate & Improve Program Effectiveness,” which is the basis for this article. She points out that we often lack knowledge of the programming students actually received. How can we expect faculty and staff to set targets and improve a program without knowing what is (or is not) actually occurring in it?

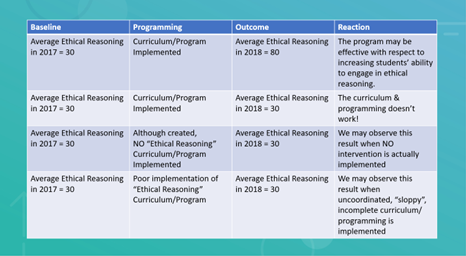

For example:

So…which is it?

- Program doesn’t “work”

- Program wasn’t implemented

- Program was implemented but poorly

A better way to set targets is to consider implementation fidelity methodology.

For Institutional Effectiveness, implementation fidelity fits between the third and fourth steps of the assessment cycle:

- Identify outcomes

- Tie programming to outcomes

- Select instruments for measurement

- IMPLEMENTATION FIDELITY

- Collect data

- Analyze and interpret data

- Use results to make decisions (close the loop)

An analogy of a “black box” is helpful when explaining the premise. We conceptualize a program as:

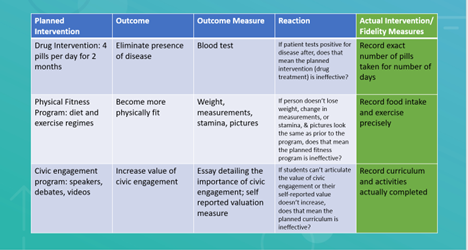

Planned Intervention → Outcome → Outcome Measure

In fact, we need to know the actual intervention, which is usually unknown (hidden in a black box). To open the box and see the actual intervention, we use implementation fidelity.

Adding the implementation fidelity step to the process, and checking whether the outcome data reflect the intervention students actually received, yields more reliable data that can then be used to set appropriate targets and inform program decisions.
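To make the reasoning concrete, here is a minimal sketch of how fidelity data might change the way an unmet target is read. It is illustrative only and does not come from the webinar: the function name `interpret_result`, the idea of scoring fidelity as the proportion of planned components delivered, and the 0.8 cutoff are all assumptions chosen for the example.

```python
from typing import Optional

# Hypothetical cutoff (an assumption, not from the webinar): the share of
# planned program components delivered that we treat as adequate.
ADEQUATE_FIDELITY = 0.8


def interpret_result(target_met: bool, fidelity: Optional[float]) -> str:
    """Suggest how to read an outcome result in light of fidelity data.

    fidelity is the proportion (0.0 to 1.0) of planned components that were
    actually delivered, or None when no fidelity data were collected.
    """
    if fidelity is None:
        # Without fidelity data the actual intervention stays in the black box.
        return "unknown: the actual intervention is a black box"
    if not 0.0 <= fidelity <= 1.0:
        raise ValueError("fidelity must be between 0.0 and 1.0")
    if target_met:
        return "target met"
    # Target not met: fidelity data separate the three explanations.
    if fidelity == 0.0:
        return "program wasn't implemented"
    if fidelity < ADEQUATE_FIDELITY:
        return "program was implemented but poorly"
    return "program may not work as designed"


if __name__ == "__main__":
    for score in (None, 0.0, 0.5, 0.9):
        print(score, "->", interpret_result(False, score))
```

The point of the sketch is the branch for `None`: without fidelity data, an unmet target cannot be attributed to any one of the three explanations, so a decision about the program or its target would rest on a guess.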
